The seventh stage of DisclAImer at La Sapienza

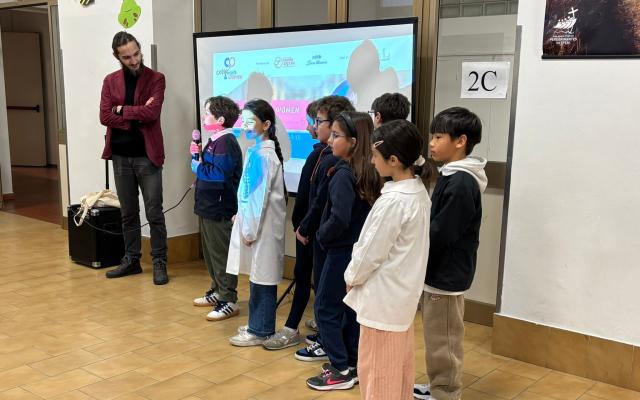

The seventh stage of the meeting series “DisclAImer. Last warnings before the revolution”, conceived by Corriere della Sera and Cineca, took place last Wednesday at Sapienza University of Rome, with a focus on the theme ‘Artificial intelligence and information’. The aim was to discuss how AI is changing everyday tools and the way we inform ourselves, communicate and understand the world, redefining information processes, editorial responsibilities, the quality of sources and the role of citizens and journalists [see the news item Artificial intelligence and information].

The initiative was so successful that the lecture hall was not large enough to accommodate all the participants, so a “second screen” had to be used. The students involved came from the humanities, economics, statistics and communication disciplines.

The intervention on soft skills (Umana)

During the meeting, representatives from business and education also shared their views. Among them was Piergiuseppe Laera from the Active Policies area of Umana, who brought the perspective of business and training.

The challenges were structured to prompt reflection on the professional user of artificial intelligence systems and on the soft skills and critical abilities such users need in order to best achieve their professional or knowledge objectives (right of citizenship).

The launch of the challenges (The Prompt Challenge)

The Rome stage hosted the challenge entitled “The prompt challenge: managing algorithmic storytelling”. This was a practical opportunity for students, teachers and young professionals to experiment with how generative AI influences content production and to reflect on the limits, potential and responsibilities of prompting tools.

Sapienza professors launched three main challenges:

1. Fact Checking vs Disinformation

The challenge concerns the use of LLM chatbots (specifically ChatGPT 5, Gemini AI 2.5 and Copilot AI) to verify controversial content. Participants, divided into groups, compared the verification capabilities of different LLMs with those of human fact-checkers. The content to be verified had to relate to areas such as politics, news, medical/scientific information or viral debunking, and the groups had to analyse the sources used by the LLM, assess their nature and reach a judgement on the veracity of the content (true/false/partially true or false). This challenge addressed the enormous problem of disinformation and misinformation, in which AI is considered an “extraordinary ally” in spreading information flows outside certified editorial contexts.

The work: the students concluded that AI is a fast tool that provides multiple sources, but fails to understand the context or misleading intent of headlines. For this reason, the best solution that emerged was to use AI as a support, leaving the final decision on veracity to human fact-checkers.

2. AI-assisted creative micro-campaign

The objective is to devise a micro-communication campaign for the brand Friska (light, low-sugar iced tea with natural ingredients). The target audience is young urbanites (aged 18–25), university students and young workers, with the aim of increasing brand awareness. The communication objective is to associate Friska with the idea of “fresh and gentle energy”, useful for sustaining daily rhythms without excesses or unrealistic promises. This challenge belonged to the strand concerning corporate communication, a field where AI systems are being developed intensively for digital marketing, with the aim of replacing human systems.

The work: in response to the challenge, the students used ChatGPT to develop the concept and the tagline “Rinfreska”. The final creative outputs were an Instagram post emphasising the “light and natural break” and an idea for a Reel which, through scenes in the classroom and urban walks, positions Friska as “natural freshness, in your little moments of calm”. The challenge applied generative AI to business communication.

3. AI makeover: from stereotypical algorithm to icon of equality

The aim of the challenge is to transform an algorithm characterised by sexist or racist bias into an algorithm that is attentive to equality and respect for gender roles. This challenge focused in particular on the issue of bias (prejudice and stereotypes) incorporated into artificial intelligence systems; recognising such bias is considered a relevant educational objective for gaining active awareness of the use of these systems.

The work: in response to the challenge, students developed counter-archetypes such as “The communicatively competent professional” (who democratises technical knowledge and uses a neutral tone) and “Alex” or “Ubuy” (genderless assistants who suggest products and activities without assuming gender or limiting the user to the domestic or aesthetic sphere). The initiative allowed for active reflection on how AI incorporates biases and for the development of critical awareness for a more ethical and equitable use of these tools.

Sapienza lecturers, such as Francesca Comunello, emphasised that the initiative provided both a conceptual framework and practical operational tools for working with generative artificial intelligence systems.

For Sapienza University, the importance of participating in the initiative, which involved in particular master's degree students from the CORIS (Communication and Social Research) department, lies in the opportunity to engage with the context, civil society and stakeholders, and to make students aware of the value and usefulness of what they are learning for their future careers.